oke-autoscaler is an open source Kubernetes node autoscaler for Oracle Container Engine for Kubernetes (OKE). The oke-autoscaler function scales OKE clusters automatically by adding nodes to, or removing nodes from, a node pool.

When you enable the oke-autoscaler function, you don't need to manually add or remove nodes, or over-provision your node pools. Instead, you specify a minimum and maximum size for the node pool, and the rest is automatic.

The oke-autoscaler git repository has everything you need to implement node autoscaling in your OKE clusters, including comprehensive step-by-step instructions.

Introduction

Node autoscaling is one of the key features of a Kubernetes cluster. It lets the cluster increase the number of nodes as demand for the service rises, and decrease the number of nodes as demand falls.

There are a number of different cluster autoscaler implementations currently available in the Kubernetes community. Of the available options (including the Kubernetes Cluster Autoscaler, Escalator, KEDA, and Cerebral), the Kubernetes Cluster Autoscaler has been adopted as the “de facto” node autoscaling solution. It’s maintained by SIG Autoscaling, and has documentation for most major cloud providers.

The Cluster Autoscaler follows a straightforward principle: it reacts to “unschedulable pods” to trigger scale-up events. Because it relies on a cluster-native metric, the Cluster Autoscaler offers a simple implementation. It also typically provides a frictionless path to getting up and running with node autoscaling.

The oke-autoscaler function brings to OKE the same approach to node autoscaling that the Cluster Autoscaler uses for other Kubernetes implementations (i.e. using unschedulable pods as the trigger signal). Read on for a more detailed overview of how oke-autoscaler works.

About oke-autoscaler

The oke-autoscaler function automatically adjusts the size of the Kubernetes cluster when one of the following conditions is true:

- there are pods that have failed to run in the cluster due to insufficient resources

- nodes in the node pool have been underutilized for an extended period of time

By default, the oke-autoscaler function implements only the scale-up feature; scale-down is optional.

Oracle Container Engine for Kubernetes (OKE) is a developer-friendly, container-native, and enterprise-ready managed Kubernetes service. It runs highly available clusters with the control, security, and predictable performance of Oracle Cloud Infrastructure.

OKE nodes are the hosts in a Kubernetes cluster: individual virtual or bare metal machines, provisioned as Kubernetes worker nodes. OKE nodes are deployed into logical groupings known as node pools. They run containerized applications in deployment units known as pods. Pods are scheduled onto nodes, where their containers consume resources such as CPU and RAM.

Overview

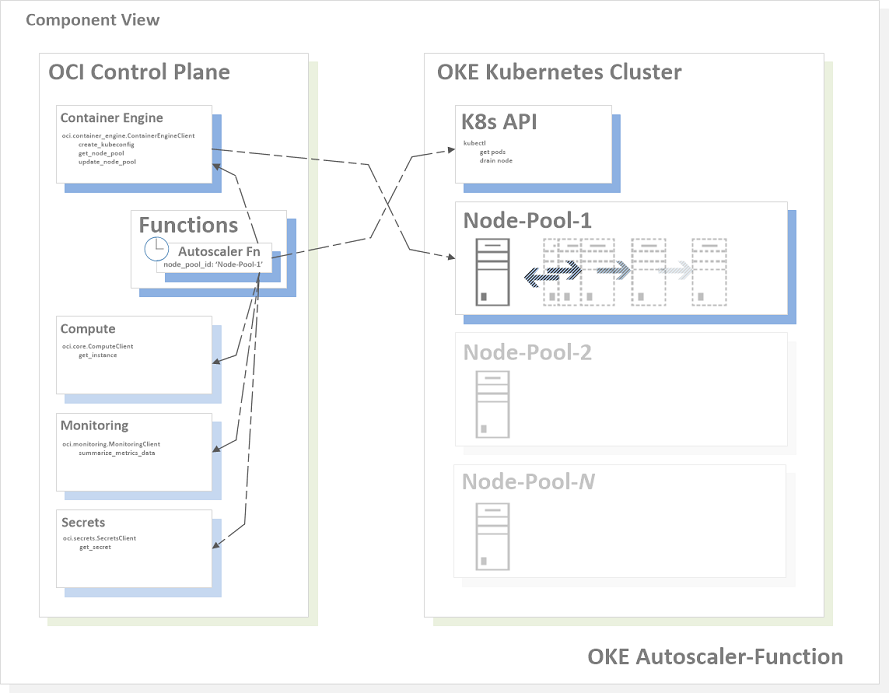

oke-autoscaler is implemented as an Oracle Function (i.e. an OCI managed serverless function):

- the Oracle Function itself is written in Python: oke-autoscaler/func.py

- the function uses a custom container image based on oraclelinux:7-slim, and also includes rh-python36, the OCI CLI, and kubectl: oke-autoscaler/Dockerfile

Evaluation Logic

The autoscaler function interacts with the Kubernetes API and a number of OCI control plane APIs to evaluate the state of the cluster and of the target node pool.

When the function is invoked, it performs the following sequence of operations:

- evaluates the state of the node pool, to ensure that the node pool is in a stable condition

- if the node pool is determined to be mutating (e.g. in the process of either adding or deleting a node, or stabilizing after adding a node), the autoscaler function will exit without performing any further operation

- if the node pool is in a stable condition, scale-up is evaluated first

- if scale-up is not triggered, the autoscaler function evaluates for scale-down (where scale-down has been enabled by the cluster administrator)
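The order of operations above can be sketched in Python, the language the function itself is written in. This is a minimal illustration, not the code in oke-autoscaler/func.py. The function `evaluate` and its boolean inputs are hypothetical stand-ins for the results of the Kubernetes and OCI API queries.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    SCALE_UP = "scale-up"
    SCALE_DOWN = "scale-down"


def evaluate(pool_is_stable: bool,
             unschedulable_pods: int,
             scale_down_enabled: bool,
             below_scale_down_threshold: bool) -> Action:
    """Pick one action per invocation, following the documented order (hypothetical helper)."""
    # 1. A mutating node pool (adding, deleting or stabilizing) ends the evaluation.
    if not pool_is_stable:
        return Action.NONE
    # 2. Scale-up is evaluated first.
    if unschedulable_pods > 0:
        return Action.SCALE_UP
    # 3. Scale-down is considered only if scale-up was not triggered
    #    and the cluster administrator has enabled it.
    if scale_down_enabled and below_scale_down_threshold:
        return Action.SCALE_DOWN
    return Action.NONE
```

Because scale-up short-circuits the evaluation, a single invocation never both adds and removes a node.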

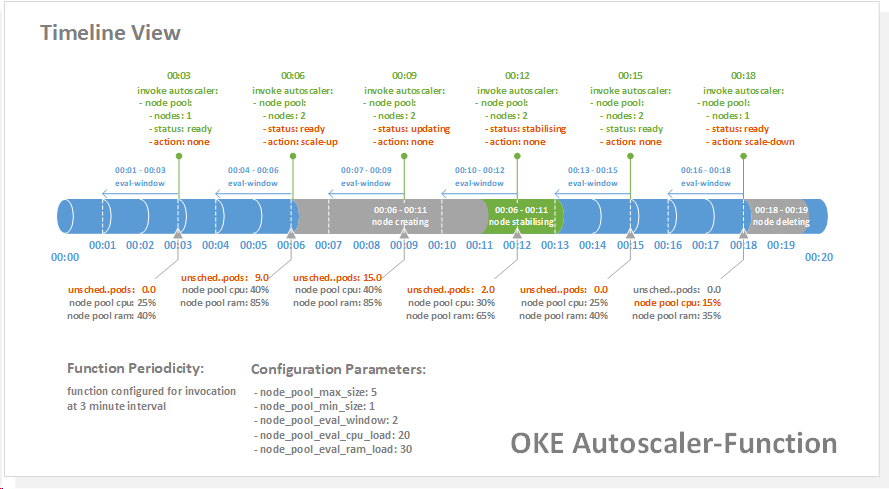

Scheduling

The oke-autoscaler function is designed to be invoked on a recurring schedule, and the invocation interval is configurable. As a starting point, consider scheduling the function to be invoked every 3 minutes.

Once invoked, the function runs in accordance with the Evaluation Logic described above.

A dimension to consider is the length of the window of time that the function will use to calculate the average resource utilization in the node pool.

The cluster administrator assigns a value to the function custom configuration parameter node_pool_eval_window. The oke-autoscaler function implements node_pool_eval_window as the number of minutes over which to:

- calculate the average CPU & RAM utilization for the node pool when evaluating for scale-down

By default, node_pool_eval_window is intended to represent the number of minutes between invocations of the function, i.e. the invocation interval. Suppose the oke-autoscaler function is scheduled for invocation every 3 minutes. Setting node_pool_eval_window to 3 then makes the function evaluate node pool utilization metrics over the 3 minutes elapsed since the previous invocation.

node_pool_eval_window does not need to match the function invocation schedule, and can be set to calculate resource utilization over either a longer or shorter window of time.
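A minimal sketch of averaging utilization over the last node_pool_eval_window minutes. It assumes the utilization samples have already been collected as (timestamp, percentage) pairs. How oke-autoscaler actually retrieves its metrics is not described here, and the helper `window_average` is hypothetical.

```python
from datetime import datetime, timedelta
from statistics import mean
from typing import Iterable, Optional, Tuple


def window_average(samples: Iterable[Tuple[datetime, float]],
                   node_pool_eval_window: int,
                   now: datetime) -> Optional[float]:
    """Average the samples that fall inside the last node_pool_eval_window minutes."""
    start = now - timedelta(minutes=node_pool_eval_window)
    in_window = [value for ts, value in samples if start <= ts <= now]
    # No samples in the window means there is no basis for a scale-down decision.
    return mean(in_window) if in_window else None
```

Lengthening the window smooths out short spikes and dips. Shortening it makes the scale-down decision react faster to recent load.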

Scaling

The cluster administrator defines what the node pool maximum and minimum number of nodes should be. The oke-autoscaler function operates within these boundaries.
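The boundary check can be sketched as follows. The helper `target_node_count` is hypothetical. It assumes one node is added or removed per scaling event, as described under Scale-Up and Scale-Down. It returns None when the move would cross the administrator's limits, which corresponds to the node-max-limit-reached warning in Function Return Data.

```python
from typing import Optional


def target_node_count(current: int, action: str,
                      min_nodes: int, max_nodes: int) -> Optional[int]:
    """Return the new node count, or None if the change would leave [min_nodes, max_nodes]."""
    if action == "scale-up":
        target = current + 1
    elif action == "scale-down":
        target = current - 1
    else:
        # No scaling requested: the pool keeps its current size.
        return current
    # Refuse any change that would cross an administrator-defined boundary.
    if target > max_nodes or target < min_nodes:
        return None
    return target
```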

Scale-Up

The oke-autoscaler function queries the cluster to count the pods for the given node pool that are in a Pending state and flagged with an Unschedulable condition.

The number of Unschedulable pods is greater than zero when the cluster lacks the resources to schedule them. This condition triggers the oke-autoscaler function to scale up the node pool by adding one node.

The scale-up feature is enabled by default.
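A sketch of the Unschedulable pod count. It works on the parsed JSON output of `kubectl get pods --all-namespaces -o json`. The Kubernetes scheduler marks such pods with a PodScheduled condition whose status is False and whose reason is Unschedulable. This version counts pods cluster-wide. How oke-autoscaler narrows the count to a given node pool is not described here, and the helper name is hypothetical.

```python
from typing import Any, Dict


def count_unschedulable(pod_list: Dict[str, Any]) -> int:
    """Count Pending pods whose PodScheduled condition reports Unschedulable.

    pod_list is the parsed output of `kubectl get pods --all-namespaces -o json`.
    """
    count = 0
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        if status.get("phase") != "Pending":
            continue
        for cond in status.get("conditions") or []:
            if (cond.get("type") == "PodScheduled"
                    and cond.get("status") == "False"
                    and cond.get("reason") == "Unschedulable"):
                count += 1
                break  # count each pod at most once
    return count
```

For example, the input can come from `json.loads(subprocess.check_output(["kubectl", "get", "pods", "--all-namespaces", "-o", "json"]))`.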

Stabilization

During the period immediately after a new node becomes active in a node pool, the cluster may still report some pods as Unschedulable. This can happen even though the new node provides enough resources to support the desired state.

To prevent a premature scale-up event, the oke-autoscaler function implements a node pool stabilization window. This window fences off the node pool from any modification during the period immediately after a new node is added. It gives the cluster the time it needs to schedule the backlog of Unschedulable pods onto the new node.

If the oke-autoscaler function is invoked during the stabilization window, a scale-up event is not triggered, even if Unschedulable pods are present.
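A minimal sketch of the stabilization check. It assumes node creation time (the created field in the function log data) is a proxy for when a node became active. The window length is a hypothetical parameter; the text does not state the value oke-autoscaler uses.

```python
from datetime import datetime, timedelta
from typing import Iterable


def is_stabilizing(node_created_times: Iterable[datetime],
                   stabilization_minutes: int,
                   now: datetime) -> bool:
    """Return True if any node was created within the stabilization window.

    stabilization_minutes is a hypothetical parameter, not a documented
    oke-autoscaler setting.
    """
    cutoff = now - timedelta(minutes=stabilization_minutes)
    return any(created > cutoff for created in node_created_times)
```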

Scale-Down

The cluster administrator defines, as a percentage, the average node pool CPU and/or RAM utilization below which the autoscaler function will scale down the node pool by deleting a node.

The oke-autoscaler function expresses the node pool average CPU and RAM utilization as a mean percentage. It derives this mean from the average utilization of each node in the node pool.

The calculated node pool averages for CPU and/or RAM utilization are evaluated against the percentage based thresholds provided by the cluster administrator.

If the node pool average CPU and/or RAM utilization is below the thresholds provided by the cluster administrator, the node pool is scaled down.

Administrator defined scale-down thresholds can be set to evaluate for:

- node pool average CPU utilization

- node pool average RAM utilization

- either node pool average CPU or RAM utilization
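The threshold evaluation can be sketched as follows, using the cpu_load and ram_load fields that appear in the function log data. The mode names and the "ram" reason string are assumptions; only the "cpu" reason appears in the documented return data. The comparison is a strict less-than, as the text describes.

```python
from statistics import mean
from typing import Dict, List, Optional


def scale_down_reason(nodes: List[Dict[str, float]],
                      mode: str,
                      cpu_threshold: float,
                      ram_threshold: float) -> Optional[str]:
    """Return "cpu" or "ram" if the node pool average is below its threshold, else None.

    mode is "cpu", "ram" or "either": hypothetical names for the three
    documented evaluation options.
    """
    if not nodes:
        return None
    # Node pool averages are the mean of the per-node utilization percentages.
    avg_cpu = mean(n["cpu_load"] for n in nodes)
    avg_ram = mean(n["ram_load"] for n in nodes)
    if mode in ("cpu", "either") and avg_cpu < cpu_threshold:
        return "cpu"
    if mode in ("ram", "either") and avg_ram < ram_threshold:
        return "ram"
    return None
```

With the two nodes from the log excerpt, average CPU is roughly 2.7% and average RAM roughly 15.3%. A 10% CPU threshold would therefore trigger a scale-down.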

When a specified scale-down condition is met, the oke-autoscaler function cordons and drains the worker node. It then calls the Container Engine API to delete the node from the node pool.
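A sketch of the cordon and drain step using kubectl, which is included in the function's container image. The drain flags are assumptions rather than the flags oke-autoscaler passes. The subsequent Container Engine API call that deletes the node is left out. The node name is the Kubernetes node name, which is the private IP in the log data. `kubectl drain` also cordons the node itself, so the explicit cordon simply mirrors the documented sequence.

```python
import subprocess
from typing import List


def drain_commands(node_name: str) -> List[List[str]]:
    """Build the kubectl commands that cordon and then drain a node (flags assumed)."""
    return [
        # Mark the node unschedulable so no new pods land on it.
        ["kubectl", "cordon", node_name],
        # Evict the node's pods. kubectl drain refuses to proceed when
        # DaemonSet-managed pods are present unless --ignore-daemonsets is set.
        ["kubectl", "drain", node_name, "--ignore-daemonsets", "--timeout=300s"],
    ]


def cordon_and_drain(node_name: str) -> None:
    """Run the commands in order, stopping on the first failure."""
    for cmd in drain_commands(node_name):
        subprocess.run(cmd, check=True)
```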

Enabling the scale-down feature is optional.

Multiple Node Pools

The oke-autoscaler function supports clusters which contain multiple node pools. For a given cluster hosting multiple node pools, oke-autoscaler functions can be enabled for one or more of the associated node pools.

Function Return Data

The function returns a JSON object containing summary data that describes the node pool status, any action taken, and the associated result.
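A sketch that serializes a summary in the same shape as the examples that follow. The helper is hypothetical. It renders counts as strings such as "2.0" to match those examples, but the log excerpt shows "2", so the exact formatting in func.py may differ.

```python
import json
from typing import Any, Dict


def build_result(status: str, action: str, reason: str,
                 node_pool_name: str, node_pool_status: str,
                 node_count: int, **counts: Any) -> str:
    """Serialize a result summary keyed by status ("success", "warning" or "error")."""
    body: Dict[str, Any] = {
        "action": action,
        "reason": reason,
        "node-pool-name": node_pool_name,
        "node-pool-status": node_pool_status,
        # The documented examples render counts as strings such as "2.0".
        "node-count": str(float(node_count)),
    }
    # Extra count fields use dashed keys, e.g. unschedulable_pods_count -> unschedulable-pods-count.
    body.update({k.replace("_", "-"): str(float(v)) for k, v in counts.items()})
    return json.dumps({status: body}, indent=2)
```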

Function completed successfully - no action performed as there is no resource pressure:

Result: {
  "success": {
    "action": "none",
    "reason": "no-resource-pressure",
    "node-pool-name": "prod-pool1",
    "node-pool-status": "ready",
    "node-count": "2.0"
  }
}

Function completed successfully - no action performed as the node pool is stabilizing:

Result: {
  "success": {
    "action": "none",
    "reason": "node-pool-status",
    "node-pool-name": "prod-pool1",
    "node-pool-status": "stabilizing",
    "unschedulable-pods-count": "4.0",
    "node-count": "2.0"
  }
}

Function completed successfully - scale-up:

Result: {
  "success": {
    "action": "scale-up",
    "reason": "unschedulable-pods",
    "unschedulable-pods-count": "10.0",
    "node-pool-name": "prod-pool1",
    "node-pool-status": "ready",
    "node-count": "3.0"
  }
}

Function completed with warning - scale-up, max node count limit reached:

Result: {
  "warning": {
    "action": "none",
    "reason": "node-max-limit-reached",
    "unschedulable-pods-count": "10.0",
    "node-pool-name": "prod-pool1",
    "node-pool-status": "ready",
    "node-count": "3.0"
  }
}

Function completed successfully - scale-down, low CPU utilization:

Result: {
  "success": {
    "action": "scale-down",
    "reason": "cpu",
    "node-pool-name": "prod-pool1",
    "node-pool-status": "ready",
    "node-count": "3.0"
  }
}

Function failed - missing user input data:

Result: {
  "error": {
    "reason": "missing-input-data"
  }
}

Function Log Data

The oke-autoscaler function has been configured to provide some basic logging of its operation.

The following excerpt illustrates the function log data relating to a single oke-autoscaler function invocation:

Node Lifecycle State:
ACTIVE
ACTIVE
...
...
Node Data: {
  "availability_domain": "xqTA:US-ASHBURN-AD-1",
  "fault_domain": "FAULT-DOMAIN-2",
  "id": "ocid1.instance.oc1.iad.anuwcljrp7nzmjiczjcacpmcg6lw7p2hlpk5oejlocl2qugqn3rxlqlymloq",
  "lifecycle_details": "",
  "lifecycle_state": "ACTIVE",
  "name": "oke-c3wgoddmizd-nrwmmzzgy2t-sfcf3hk5x2a-1",
  "node_error": null,
  "node_pool_id": "ocid1.nodepool.oc1.iad.aaaaaaaaae3tsyjtmq3tan3emyydszrqmyzdkodgmuzgcytbgnrwmmzzgy2t",
  "private_ip": "10.0.0.92",
  "public_ip": "193.122.162.73",
  "subnet_id": "ocid1.subnet.oc1.iad.aaaaaaaavnsn6hq7ogwpkragmzrl52dwp6vofkxgj6pvbllxscfcf3hk5x2a"
}
...
...
Nodes: {
  "0": {
    "name": "10.0.0.75",
    "id": "ocid1.instance.oc1.iad.anuwcljrp7nzmjicknuodt727iawkx32unhc2kn53zrbrw7fubxexsamkf7q",
    "created": "2020-05-20T11:50:04.988000+00:00",
    "cpu_load": 2.3619126090991616,
    "ram_load": 15.663938512292285
  },
  "1": {
    "name": "10.0.0.92",
    "id": "ocid1.instance.oc1.iad.anuwcljrp7nzmjiczjcacpmcg6lw7p2hlpk5oejlocl2qugqn3rxlqlymloq",
    "created": "2020-05-24T05:33:14.121000+00:00",
    "cpu_load": 3.01701506531393,
    "ram_load": 14.896256379084324
  }
}
...
...
Result: {
  "success": {
    "action": "none",
    "reason": "no-resource-pressure",
    "node-pool-name": "prod-pool1",
    "node-pool-status": "ready",
    "node-count": "2"
  }
}

Limitations

The oke-autoscaler function has the following limitations:

- This function should not be configured for invocation on a recurring schedule with an interval less than 2.5 minutes

- The function scale-down feature is not designed for use in clusters scheduling stateful workloads that use Local PersistentVolumes

- The function initiates a call to the Container Engine API updateNodePool() to scale, which can take several minutes to complete

- The function cannot wait for the updateNodePool() operation to complete; the cluster administrator will need to monitor the success or failure of the operation outside of the function

If resources are deleted or moved when autoscaling your node pool, workloads might experience transient disruption. For example, if the workload consists of a controller with a single replica, that replica's pod might be rescheduled onto a different node if its current node is deleted.

Before enabling the autoscaler function scale-down feature, configure workloads to tolerate potential disruption, or ensure that critical pods are not interrupted.
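As a starting point for that review, the following sketch lists Deployments that run a single replica, the case used as an example above. It reads the parsed output of `kubectl get deployments --all-namespaces -o json`. The helper is hypothetical and not part of oke-autoscaler. For critical pods, a PodDisruptionBudget is the standard Kubernetes mechanism for limiting voluntary disruption.

```python
from typing import Any, Dict, List


def single_replica_deployments(deployment_list: Dict[str, Any]) -> List[str]:
    """Return "namespace/name" for each Deployment that runs exactly one replica.

    deployment_list is the parsed output of
    `kubectl get deployments --all-namespaces -o json`.
    """
    flagged = []
    for dep in deployment_list.get("items", []):
        # spec.replicas defaults to 1 for a Deployment when it is omitted.
        replicas = dep.get("spec", {}).get("replicas", 1)
        if replicas == 1:
            meta = dep.get("metadata", {})
            flagged.append(f"{meta.get('namespace', 'default')}/{meta.get('name', '')}")
    return flagged
```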

Conclusion

Node autoscaling is one of the key features of a Kubernetes cluster, and with oke-autoscaler you can easily implement this functionality in an OKE cluster.

Head over to the oke-autoscaler code repository for the necessary code and detailed instructions, and start gaining the benefits of Kubernetes node autoscaling in your OKE clusters.

Cover Photo by Paweł Czerwiński on Unsplash.